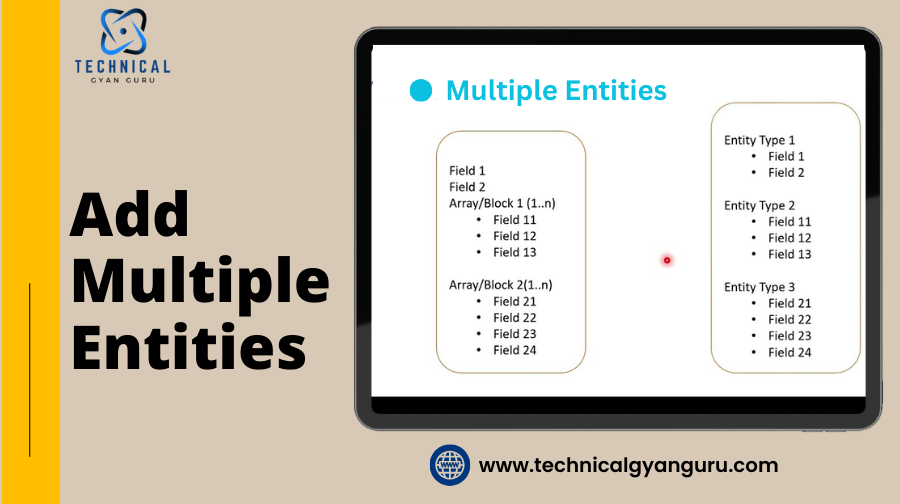

Learn how to efficiently create multiple entities within a single OData service operation in SAP. Discover techniques for optimizing data transfer and improving performance.

In today’s data-driven world, efficiency and scalability in managing data operations are crucial. OData (Open Data Protocol) is a powerful protocol for creating and consuming RESTful APIs, and it provides robust capabilities for handling data. One of its advanced features is the ability to add multiple entities in a single operation. This capability can significantly streamline processes and reduce the number of server interactions needed. In this article, we’ll delve deep into how to add multiple entities in one operation in OData services, exploring the concepts, implementation steps, and best practices.

Understanding OData Entity Operations

GET https://services.odata.org/v4/TripPinServiceRW/People HTTP/1.1

OData-Version: 4.0

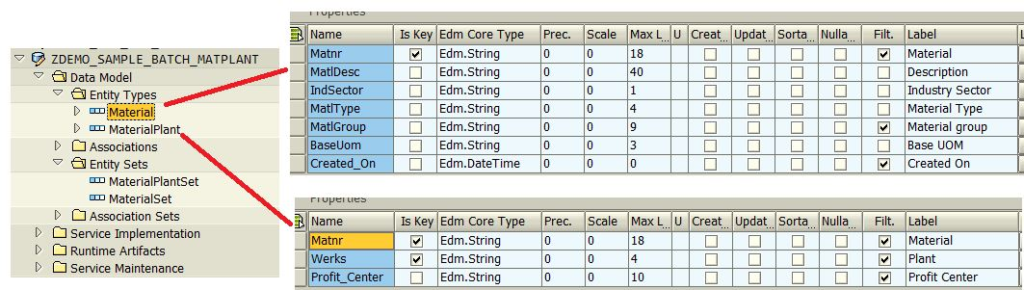

OData-MaxVersion: 4.0 OData enables CRUD (Create, Read, Update, Delete) operations over HTTP using standard methods (POST, GET, PUT, DELETE). When dealing with multiple entities, OData can handle batch requests efficiently. To add multiple entities in one operation, you use the batch processing feature, which allows you to combine multiple requests into a single HTTP request.
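To make the method mapping concrete, here is a minimal Python sketch using only the standard library's `urllib.request`. It builds, but does not send, the TripPin read request from this section. The `CRUD_METHODS` table and the `build_request` helper are illustrative names, and the choice of `PATCH` for partial updates versus `PUT` for full replacement reflects OData v4 conventions rather than anything specific to this article.

```python
import urllib.request

# Hypothetical mapping of CRUD operations to the HTTP methods OData uses.
# PATCH updates selected properties; PUT replaces the whole entity.
CRUD_METHODS = {
    "create": "POST",
    "read": "GET",
    "update": "PATCH",
    "replace": "PUT",
    "delete": "DELETE",
}

SERVICE_ROOT = "https://services.odata.org/v4/TripPinServiceRW"


def build_request(operation, path, data=None):
    """Build (but do not send) an OData request with version headers."""
    headers = {"OData-Version": "4.0", "OData-MaxVersion": "4.0"}
    if data is not None:
        headers["Content-Type"] = "application/json"
    return urllib.request.Request(
        f"{SERVICE_ROOT}/{path}",
        data=data,
        headers=headers,
        method=CRUD_METHODS[operation],
    )


req = build_request("read", "People")
print(req.get_method(), req.full_url)
```

Passing the request to `urllib.request.urlopen` would actually send it; keeping construction separate makes the method and headers easy to inspect.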

Why Add Multiple Entities in One Operation?

Adding multiple entities in one operation offers several advantages:

- Efficiency: Reduces the number of HTTP requests and responses, minimizing network overhead and latency.

- Atomicity: When the create requests are grouped in a changeset, the service processes them as a single unit of work: either all entities are added or none are, which helps maintain data consistency.

- Reduced Complexity: Simplifies client-side logic by consolidating multiple operations into one request.

How to Add Multiple Entities Using OData Batch Processing

Batch processing in OData means sending a single HTTP request that bundles multiple individual requests, which the service then processes together. The OData protocol defines this as the `$batch` request. Here's a step-by-step guide on how to implement it:

1. Understand the Batch Request Format

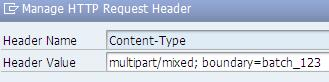

An OData batch request is composed of a single multipart MIME request that contains multiple parts. Each part represents a different OData request. The MIME type for batch requests is multipart/mixed, and each part is separated by a boundary string.
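The boundary rules are easier to see in code. Below is a minimal Python sketch of multipart framing: each part starts with `--` plus the boundary, the body ends with `--` plus the boundary plus `--`, and lines are joined with CRLF as MIME requires. The helper names (`new_boundary`, `http_part`, `frame_multipart`), the `uuid`-based boundary, and the two `GET` requests are illustrative assumptions, not part of any OData library.

```python
import uuid

CRLF = "\r\n"  # MIME line breaks must be CRLF


def new_boundary(prefix):
    """Generate a boundary that is very unlikely to occur inside any part."""
    return f"{prefix}_{uuid.uuid4().hex}"


def http_part(request_text):
    """Wrap a raw HTTP request in the MIME headers a batch part carries."""
    return CRLF.join([
        "Content-Type: application/http",
        "Content-Transfer-Encoding: binary",
        "",  # blank line ends the part headers
        request_text,
    ])


def frame_multipart(boundary, parts):
    """Join parts with '--boundary' delimiters and a closing '--boundary--'."""
    lines = []
    for part in parts:
        lines += [f"--{boundary}", part]
    lines.append(f"--{boundary}--")
    return CRLF.join(lines) + CRLF


boundary = new_boundary("batch")
body = frame_multipart(boundary, [
    http_part("GET People HTTP/1.1" + CRLF),
    http_part("GET Airlines HTTP/1.1" + CRLF),
])
content_type = f"multipart/mixed; boundary={boundary}"
```

A random boundary is a simple way to avoid accidentally matching a delimiter inside a JSON payload; fixed strings like `batch_123` work too as long as they never appear in the content.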

Example Batch Request Format:

```http
POST /MyService/$batch HTTP/1.1
Content-Type: multipart/mixed; boundary=batch_123

--batch_123
Content-Type: multipart/mixed; boundary=changeset_123

--changeset_123
Content-Type: application/http
Content-Transfer-Encoding: binary
Content-ID: 1

POST /MyService/Entities HTTP/1.1
Content-Type: application/json

{
  "Property1": "Value1",
  "Property2": "Value2"
}

--changeset_123
Content-Type: application/http
Content-Transfer-Encoding: binary
Content-ID: 2

POST /MyService/Entities HTTP/1.1
Content-Type: application/json

{
  "Property1": "Value3",
  "Property2": "Value4"
}

--changeset_123--

--batch_123--
```

2. Create the Batch Request

- Define the Batch Endpoint: The batch request is sent to the special `$batch` endpoint of your OData service, for example `POST /MyService/$batch`.

- Include a Changeset: Within the batch request, include a changeset boundary that groups related operations. Each changeset can contain multiple `POST` requests, as well as other modifying requests such as `PUT`, `PATCH`, or `DELETE`; `GET` requests are not allowed inside a changeset.

- Specify HTTP Methods and Headers: Each individual request within the batch specifies its HTTP method (e.g., `POST` for creating entities), along with the necessary headers and payload.
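The three steps above can be sketched in Python with only the standard library. The function below builds a `$batch` body with a single changeset holding one `POST` per entity, numbering each part with a `Content-ID` header. The service path `/MyService` and entity set `Entities` come from the example request; `build_create_batch` is a hypothetical helper, not a real client API.

```python
import json
import uuid

CRLF = "\r\n"


def build_create_batch(entity_set, entities, service_path="/MyService"):
    """Return (url_path, headers, body) for a $batch that creates all
    `entities` inside one changeset, so they succeed or fail together."""
    batch = f"batch_{uuid.uuid4().hex}"
    changeset = f"changeset_{uuid.uuid4().hex}"

    lines = [
        f"--{batch}",
        f"Content-Type: multipart/mixed; boundary={changeset}",
        "",
    ]
    for content_id, entity in enumerate(entities, start=1):
        lines += [
            f"--{changeset}",
            "Content-Type: application/http",
            "Content-Transfer-Encoding: binary",
            f"Content-ID: {content_id}",
            "",  # end of MIME part headers
            f"POST {service_path}/{entity_set} HTTP/1.1",
            "Content-Type: application/json",
            "",  # end of inner HTTP headers
            json.dumps(entity),
        ]
    lines += [f"--{changeset}--", f"--{batch}--", ""]

    headers = {"Content-Type": f"multipart/mixed; boundary={batch}"}
    return f"{service_path}/$batch", headers, CRLF.join(lines)


path, headers, body = build_create_batch(
    "Entities",
    [
        {"Property1": "Value1", "Property2": "Value2"},
        {"Property1": "Value3", "Property2": "Value4"},
    ],
)
```

Sending it is then an ordinary HTTP `POST` of `body` to the service's `$batch` URL with `headers`. Because both creates share one changeset, a service that honors changeset semantics should create both entities or neither.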

3. Implement Batch Processing in the OData Service

- Server-Side Handling: Your OData service should be configured to handle batch requests. This involves parsing the multipart request, processing each individual request, and sending back a response.

- Transaction Management: Ensure that the service processes all requests within a changeset as a single transaction. If one request fails, the entire changeset should be rolled back to maintain data consistency.
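On the server, the all-or-nothing rule for a changeset maps naturally onto a database transaction. The sketch below uses Python's built-in `sqlite3` as a stand-in for your backend; the `entities` table, its columns, and `apply_changeset` are hypothetical. Every create in the changeset runs inside one transaction, and any failure rolls back all inserts from that changeset.

```python
import sqlite3


def apply_changeset(conn, creates):
    """Insert every entity from one changeset atomically.

    Returns a list of (status, new_id) on success; on any failure the
    transaction is rolled back and a single error status is returned.
    """
    results = []
    try:
        with conn:  # commits on success, rolls back on any exception
            for entity in creates:
                cur = conn.execute(
                    "INSERT INTO entities (property1, property2) VALUES (?, ?)",
                    (entity["Property1"], entity["Property2"]),
                )
                results.append((201, cur.lastrowid))
    except (sqlite3.IntegrityError, KeyError) as exc:
        # One failing request invalidates the whole changeset.
        return [(400, str(exc))]
    return results


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE entities (id INTEGER PRIMARY KEY, "
    "property1 TEXT NOT NULL, property2 TEXT NOT NULL)"
)

ok = apply_changeset(conn, [
    {"Property1": "Value1", "Property2": "Value2"},
    {"Property1": "Value3", "Property2": "Value4"},
])
# The second create violates NOT NULL, so the first is rolled back too.
failed = apply_changeset(conn, [
    {"Property1": "Value5", "Property2": "Value6"},
    {"Property1": "Value7", "Property2": None},
])
```

After both calls, the table holds only the two rows from the first changeset; `Value5` never becomes visible even though its insert succeeded before the failure.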

4. Handle the Batch Response

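The response to a batch request also uses the multipart MIME format. Each part of the response corresponds to an individual request in the batch and contains that request's result; responses to requests in a changeset are nested in a changeset part of their own. If any request in a changeset fails, the service typically returns a single error response for the whole changeset instead of one response per request.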

Example Batch Response Format:

```http
HTTP/1.1 200 OK
Content-Type: multipart/mixed; boundary=batchresponse_123

--batchresponse_123
Content-Type: multipart/mixed; boundary=changesetresponse_123

--changesetresponse_123
Content-Type: application/http
Content-Transfer-Encoding: binary

HTTP/1.1 201 Created
Content-Type: application/json

{
  "ID": 1,
  "Property1": "Value1",
  "Property2": "Value2"
}

--changesetresponse_123
Content-Type: application/http
Content-Transfer-Encoding: binary

HTTP/1.1 201 Created
Content-Type: application/json

{
  "ID": 2,
  "Property1": "Value3",
  "Property2": "Value4"
}

--changesetresponse_123--

--batchresponse_123--
```
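A client has to unpack this nested multipart body to see what happened to each request. One way to do that in Python is to let the standard `email` package parse the MIME structure, then read the status line and JSON body out of each `application/http` part. The `parse_batch_response` name is hypothetical, and `sample` is a compact copy of the example response above.

```python
import email
import json

CRLF = "\r\n"


def parse_batch_response(content_type, body):
    """Return a list of (status_code, payload) for every application/http
    part in a $batch response, including parts nested in changesets."""
    # The email parser needs the top-level Content-Type to find the boundary.
    msg = email.message_from_string(f"Content-Type: {content_type}{CRLF}{CRLF}{body}")
    results = []
    for part in msg.walk():
        if part.get_content_type() != "application/http":
            continue  # skip the batch and changeset containers
        raw = part.get_payload().replace(CRLF, "\n")
        head, _, payload = raw.partition("\n\n")
        status_line = head.split("\n", 1)[0]  # e.g. "HTTP/1.1 201 Created"
        status = int(status_line.split()[1])
        payload = payload.strip()
        results.append((status, json.loads(payload) if payload else None))
    return results


sample = CRLF.join([
    "--batchresponse_123",
    "Content-Type: multipart/mixed; boundary=changesetresponse_123",
    "",
    "--changesetresponse_123",
    "Content-Type: application/http",
    "Content-Transfer-Encoding: binary",
    "",
    "HTTP/1.1 201 Created",
    "Content-Type: application/json",
    "",
    '{"ID": 1, "Property1": "Value1", "Property2": "Value2"}',
    "--changesetresponse_123",
    "Content-Type: application/http",
    "Content-Transfer-Encoding: binary",
    "",
    "HTTP/1.1 201 Created",
    "Content-Type: application/json",
    "",
    '{"ID": 2, "Property1": "Value3", "Property2": "Value4"}',
    "--changesetresponse_123--",
    "--batchresponse_123--",
    "",
])

results = parse_batch_response("multipart/mixed; boundary=batchresponse_123", sample)
for status, entity in results:
    print(status, entity["ID"])
```

Because `walk()` visits every nested part, the same function also handles a failed changeset that comes back as a single `application/http` error part.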

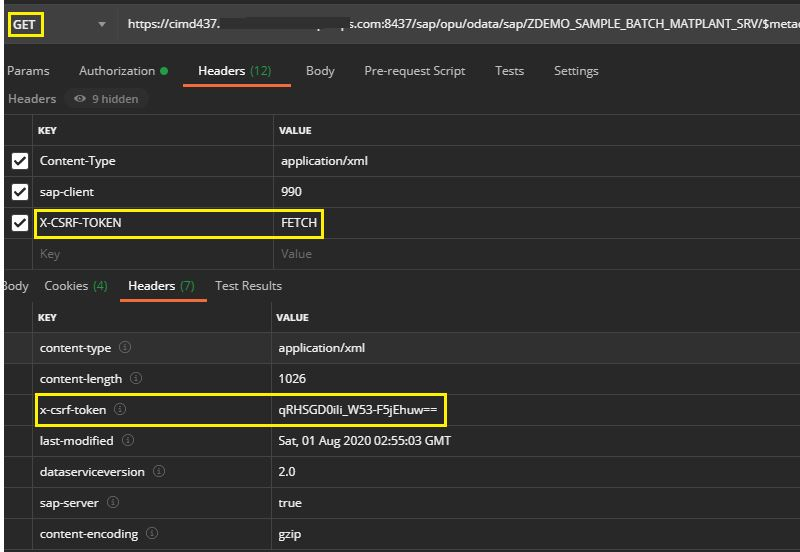

5. Testing and Validation

- Testing Tools: Use tools like Postman or custom scripts to test batch requests and responses. Verify that the service correctly processes the batch and returns the expected results.

- Error Handling: Implement robust error handling for scenarios where one or more requests fail. Ensure that errors are reported clearly and that any necessary rollback mechanisms are in place.
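For the custom-script route, a small validation helper can turn a parsed batch response into a pass/fail result. The sketch below assumes the response has already been reduced to `(status, payload)` pairs (for example by a MIME parser) and that each created entity carries a key property named `ID`, as in the example response; `check_create_results` and `BatchError` are hypothetical names.

```python
class BatchError(Exception):
    """Raised when a $batch response does not match what was sent."""


def check_create_results(sent_count, results):
    """Validate (status, payload) pairs parsed from a $batch response.

    A successful changeset should yield one 2xx result per create request;
    a failed changeset may instead come back as a single error part.
    Returns the new keys, assuming a hypothetical key property "ID".
    """
    failures = [(status, body) for status, body in results if not 200 <= status < 300]
    if failures:
        raise BatchError(f"changeset failed: {failures}")
    if len(results) != sent_count:
        raise BatchError(f"expected {sent_count} results, got {len(results)}")
    return [body["ID"] for _, body in results]


# Happy path: two creates, two 201 responses.
ids = check_create_results(2, [(201, {"ID": 1}), (201, {"ID": 2})])

# Failure path: the whole changeset was rejected with one error part.
try:
    check_create_results(2, [(400, {"error": "invalid Property2"})])
    rejected = False
except BatchError as exc:
    rejected = True
    print(exc)
```

Checking the result count as well as the status codes catches services that silently drop parts, not just ones that report errors.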

Best Practices for Using OData Batch Processing

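- Optimize Batch Size: Avoid excessively large batches. A large changeset keeps a single transaction open for longer, and one failing request rolls back everything in it, so very large batches are slower to process and more expensive to retry. Find a balance between fewer round trips and manageable units of work.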

- Monitor Performance: Regularly monitor the performance of batch processing to identify and address any bottlenecks or issues.

- Secure Data Transmission: Ensure that batch requests and responses are transmitted over secure channels (e.g., HTTPS) to protect sensitive data.

- Document Batch Endpoints: Clearly document the batch processing capabilities and endpoints in your API documentation to help developers understand how to use them effectively.
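One simple way to keep batches manageable is to split a long list of entities into fixed-size chunks and send one `$batch` (with one changeset) per chunk. The limit of 100 below is an arbitrary placeholder, not a value from the OData specification; tune it against your own service. Note the trade-off: each chunk is atomic on its own, so a failure rolls back only that chunk, not entities already committed by earlier ones.

```python
from itertools import islice


def chunked(items, size):
    """Yield successive lists of at most `size` items."""
    if size < 1:
        raise ValueError("size must be >= 1")
    it = iter(items)
    while chunk := list(islice(it, size)):
        yield chunk


MAX_CHANGESET_SIZE = 100  # hypothetical limit; tune for your service

entities = [{"Property1": f"Value{i}"} for i in range(250)]
batches = list(chunked(entities, MAX_CHANGESET_SIZE))
print([len(b) for b in batches])
```

Each element of `batches` can then be passed to whatever function builds and sends a single `$batch` request.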

Conclusion

Adding multiple entities in one operation using OData batch processing is a powerful technique that can enhance the efficiency and performance of your data operations. By understanding the batch request format, implementing batch processing in your OData service, and adhering to best practices, you can streamline your data interactions and improve the overall user experience. Whether you are developing a new application or optimizing an existing one, mastering OData batch processing will be a valuable skill in your toolkit.

For further exploration, consider diving into the OData specifications and experimenting with batch processing in your development environment. The flexibility and power of OData can unlock new possibilities for your data integration and management needs.
